A new, third era has started, led by five AI revolutions that will increase global GDP by 55%. That increase will lead to significant creation, capture, and consolidation of seemingly unrelated markets, geographies, and customer segments, driving more than $70 billion in growth over the next 10 years.

1. Multimodal, Multi-task, Zero-shot, and Open-Vocabulary

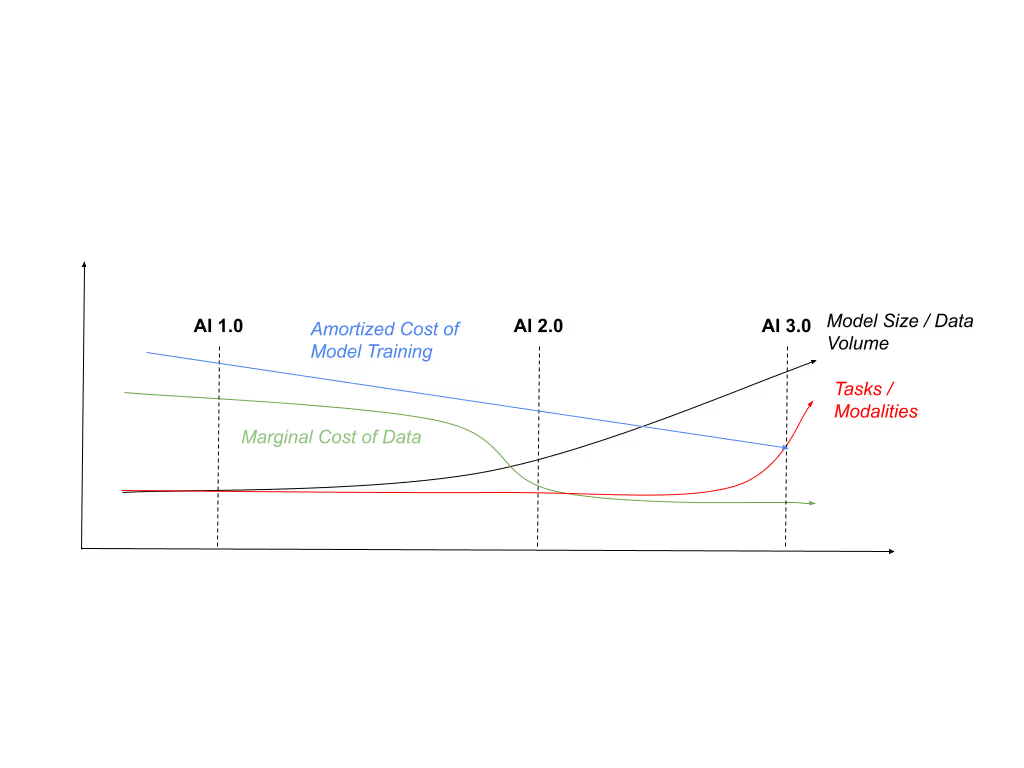

The era of AI 1.0 was narrow. AI 1.0 Models were small, trained on hundreds of thousands of private training examples in order to classify or predict a narrow set of predefined labels. AI 1.0 was driven by the development of new hardware and model architectures which demonstrated state-of-the-art results in image classification, object detection, and semantic segmentation.

In 2018, Universal Language Model Fine-Tuning (ULMFiT) introduced domain-agnostic pretraining, a technique which allowed researchers to train models on a mix of cheap, ubiquitous domain-agnostic data and expensive, limited datasets covering a single task and a narrow set of defined labels. This marked AI 2.0, in which larger datasets enabled the development of larger, more accurate models, well suited to their narrow, predefined tasks. AI 2.0 allowed researchers to scale models using large datasets while reducing the marginal cost of the data needed for training.

AI 3.0 isn’t the era of Large Language Models (LLMs) but the era of Multimodal, Multi-task, Zero-shot and Open-Vocabulary models. These pre-trained models can be designed to take images, text, video, depth-maps, or point-clouds as inputs (multimodal), and to solve a broad range of tasks without a limited set of classification labels (open-vocabulary) or task-specific training data (zero-shot). In AI 3.0, new techniques allow researchers to amortize the cost of model development and serving across tasks and modalities, reducing the need for task-specific infrastructure or labelled training data.
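To make the open-vocabulary idea concrete, here is a minimal sketch, assuming inputs and label text have already been embedded into one shared vector space. The vectors are hand-made toy values standing in for the output of a real multimodal encoder, and `cosine` and `classify` are hypothetical helper names, not part of any library.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def classify(input_vec, label_vecs):
    """Return the label whose embedding is closest to the input embedding."""
    return max(label_vecs, key=lambda label: cosine(input_vec, label_vecs[label]))

# Toy embeddings (assumption): a real system would get these from an encoder.
label_vecs = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
}
image_vec = [0.8, 0.2, 0.1]
prediction = classify(image_vec, label_vecs)

# Open vocabulary: a new label is added at inference time, with no retraining.
label_vecs["a photo of a car"] = [0.0, 0.1, 0.9]
car_image_vec = [0.1, 0.0, 0.95]
prediction_after = classify(car_image_vec, label_vecs)

print(prediction)
print(prediction_after)
```

The label set is just a dictionary of text embeddings, so extending the vocabulary means adding an entry rather than retraining a classification head.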

2. Architecture, Quality and Inverse-Scaling

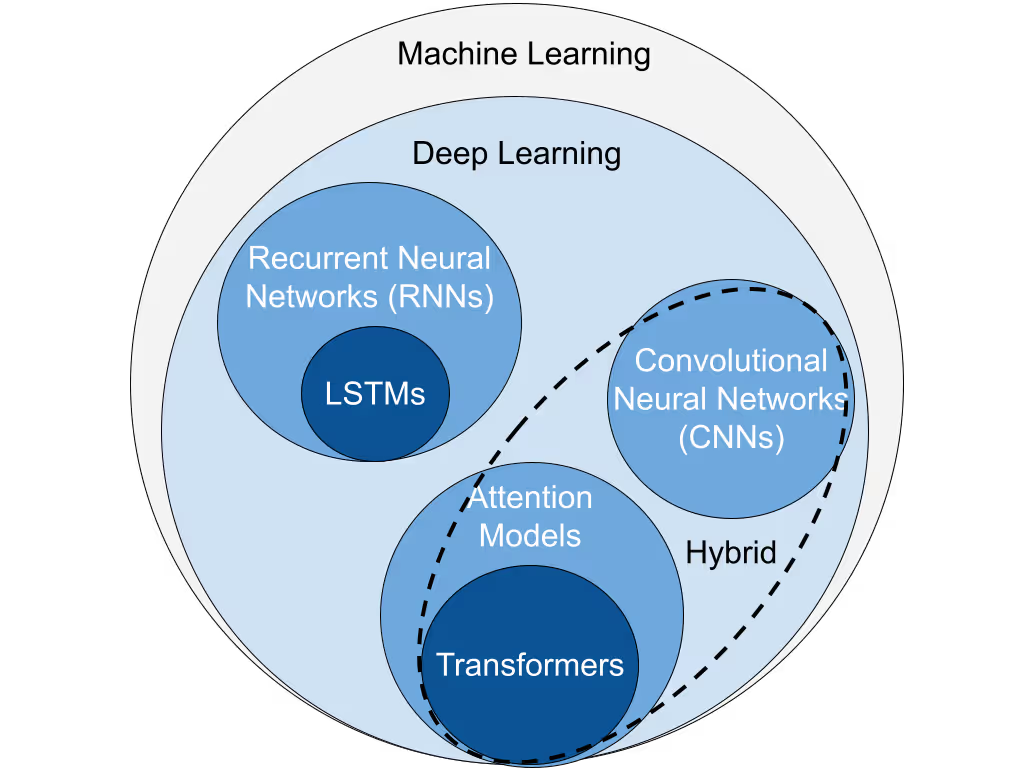

In AI 2.0, modalities were tied to particular model architectures: LSTMs became popular for language modelling, CNNs for image modelling, FFNs for tabular data, and so on. In AI 3.0, the advent of the Transformer architecture has allowed the same architecture to be reused across increasingly large datasets, spanning a diverse set of modalities from text to images, and even video.

Transformers are not without flaws, however: they are very memory intensive and hard to train. These memory and training requirements have demanded increasingly large datasets and have practically limited the size of input Transformers can ingest. Despite these challenges, Transformers have changed the economics of innovation, because innovations like FlashAttention, ALiBi and Multi-Query Attention which benefit one modality benefit all modalities. This is profound and has largely characterized the ‘arms race’ which took place between 2017 and 2022, as industrial labs sought to acquire increasingly large data centers in order to scale up their Transformer models to larger and larger datasets.

While these increases in model size, data and compute have all driven progress in the past, it’s not obvious that scale is still the answer. Recent works like Chinchilla on model size, Galactica, LLaMA, and phi-1 on pretraining, and Alpaca, LIMA and Orca on fine-tuning all point to the importance of quality over quantity. Furthermore, beyond the practical limits to data acquisition, papers like ‘The Mirage of Scale and Emergence’ and ‘Inverse Scaling: When Bigger Isn’t Better’ demonstrate the limits and harms of scale: given the capacity, models tend to memorize responses rather than understand their inputs.

3. Retrieval and Prompting

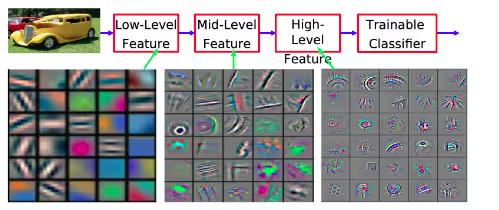

Deep Learning models are simply stacks of smaller, shallow models, which are optimized jointly during the training process to minimize the discrepancy between the model’s final predictions and some labels. Each layer in a Deep Learning model extracts increasingly abstract features from the input data, gradually transforming it into a more meaningful representation. The depth of the model allows for hierarchical learning, where lower layers capture low-level patterns, and higher layers capture more abstract and semantically meaningful mathematical representations.
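As a rough illustration of "stacks of shallow models", the sketch below composes two tiny layers and measures the discrepancy between the final prediction and a label. The weights, the ReLU non-linearity and the squared-error loss are illustrative assumptions; training (adjusting all layers jointly to reduce the loss) is left out.

```python
def linear(weights, bias, x):
    """One shallow model: a weighted sum per output unit, plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    """Non-linearity applied between layers."""
    return [max(0.0, t) for t in v]

def forward(layers, x):
    """Feed the input through every layer; hidden layers use ReLU, the last is linear."""
    for weights, bias in layers[:-1]:
        x = relu(linear(weights, bias, x))
    weights, bias = layers[-1]
    return linear(weights, bias, x)

def squared_error(pred, target):
    """Discrepancy between predictions and labels that training would minimize."""
    return sum((p - t) ** 2 for p, t in zip(pred, target))

# Hand-picked toy weights (assumption): layer 1 maps 2 -> 2, layer 2 maps 2 -> 1.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
prediction = forward(layers, [2.0, 1.0])
loss = squared_error(prediction, [3.0])
print(prediction, loss)
```

The intermediate vector produced by the first layer is the kind of learned representation that the following paragraphs put to work for search.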

With the development of modern vector databases and approximate nearest neighbour database extensions, in 2019 these “semantically meaningful mathematical representations” came to disrupt almost 20 years of search stagnation ruled by the BM25 and PageRank algorithms. Now, AI 3.0 is again disrupting search, powering new experiences like multimodal and generative search, and leading to increased competition and innovation around the web’s most treasured tool.

While Large AI 3.0 Models can often complete tasks without task-specific training data, examples are often necessary to reach the levels of performance and reliability needed in end-user applications. Here, while AI models are disrupting search, search is empowering AI models with live knowledge bases, and ‘textbooks’ of example responses. These examples prompt the models with context on the style of answer required and provide the up-to-date information needed to provide answers which are factually correct.
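The loop described here, retrieving relevant examples and placing them in the prompt, can be sketched as follows. This is a minimal illustration under stated assumptions: the two-dimensional vectors are toy embeddings, the search is exact rather than approximate, and `retrieve` and `build_prompt` are hypothetical names. A vector database would replace the sorted scan with an approximate nearest neighbour index.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def retrieve(query_vec, store, k):
    """Exact nearest-neighbour search: rank every stored item by similarity."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item["vector"]), reverse=True)
    return ranked[:k]

def build_prompt(question, examples):
    """Place the retrieved passages ahead of the question as context."""
    context = "\n".join(f"- {item['text']}" for item in examples)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

# Toy knowledge base (assumption): texts with hand-made embeddings.
store = [
    {"text": "The office closes at 6pm on Fridays.", "vector": [0.9, 0.1]},
    {"text": "The cafeteria serves lunch at noon.", "vector": [0.2, 0.8]},
    {"text": "The office opens at 8am on weekdays.", "vector": [0.8, 0.3]},
]
query_vec = [1.0, 0.2]  # stands in for the embedded question
top = retrieve(query_vec, store, k=2)
prompt = build_prompt("When does the office close on Fridays?", top)
print(prompt)
```

Because the knowledge base lives outside the model, updating an answer means editing a stored passage rather than retraining.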

4. Parameter Efficient Fine-tuning (PeFT), Adaption and Pretrained Foundational Models

The trajectory of AI is a trajectory of economics: how can we minimize our cost per unit of accuracy? In AI 2.0 we managed to reduce the marginal cost of data using large domain-agnostic datasets for unsupervised pretraining, and we managed to better amortize the cost of pretraining across tasks using techniques like transfer learning and fine-tuning to repurpose the low and intermediate layers of pre-trained AI models. This unlocked fertile ground for pre-trained model repositories like TensorFlow Hub, PyTorch Hub, Hugging Face, PaddleHub, timm and Kaggle Models, and later, in 2020, Adapter Hubs, to share and compare pre-trained and fine-tuned models.

In AI 3.0 we have not only amortized the cost of pre-training but also reduced and amortized the cost of fine-tuning models across modalities and tasks. This next shift is the reason we are seeing the explosion of AI-as-a-service platforms like OpenAI’s API, Replicate and OctoML, which allow users to share large serverless pre-trained model endpoints.
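A back-of-the-envelope sketch shows why parameter-efficient fine-tuning reduces cost. It assumes a low-rank adapter in the style of LoRA, which this section does not spell out: the pre-trained weight matrix is frozen and only two small matrices of rank `rank` are trained. The layer size and rank below are illustrative, not taken from any particular model.

```python
def full_params(d_in, d_out):
    """Trainable parameters when the whole weight matrix is fine-tuned."""
    return d_in * d_out

def low_rank_params(d_in, d_out, rank):
    """Trainable parameters for two adapter matrices: (d_in x rank) and (rank x d_out)."""
    return rank * (d_in + d_out)

# Illustrative sizes (assumption): one 4096 x 4096 projection, adapter rank 8.
d = 4096
rank = 8
full = full_params(d, d)
adapter = low_rank_params(d, d, rank)
fraction = adapter / full

print(f"full fine-tuning: {full:,} parameters")
print(f"low-rank adapter: {adapter:,} parameters ({fraction:.2%} of full)")
```

Since the frozen base weights are shared, one hosted model can serve many tasks, each carrying only its own small adapter.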

5. Quantization, Acceleration and Cost

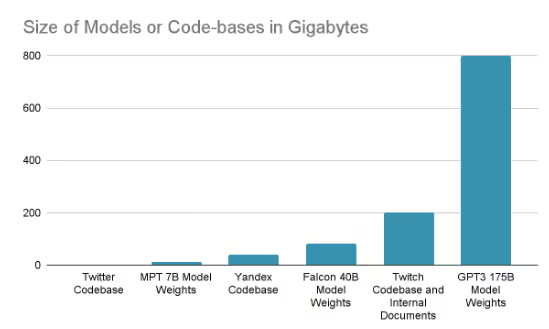

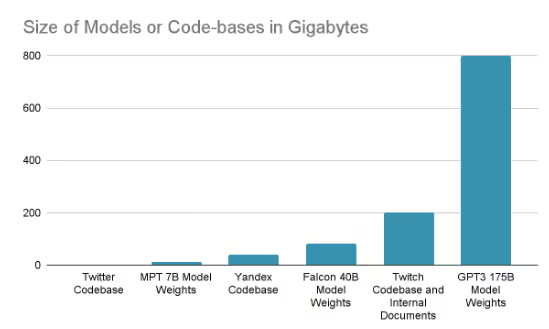

In the 2000s, the Cloud, Microservices, and Serverless changed the economics of the web, unlocking tremendous value for hardware vendors, big tech and small startups. The Cloud reduced the fixed and upfront costs of web hosting, Microservices reduced the unit of development and Serverless reduced the unit of scaling.

Large Language Models (LLMs) cannot work with Serverless! Serverless is driven by cold start times: the cold start of a typical AWS Lambda function is around 250ms, while the average cold start on Banana.dev (a serverless AI model hosting platform) is around 14 seconds, more than 50x longer. This is obvious and unavoidable when we think about the size, complexity and dependencies of modern AI models. The MPT 7B LLM is roughly a third the size of the Yandex codebase, but a user query may only require 0.1% of that codebase at a given time. To generate text from MPT 7B, by contrast, all 13 GB are needed multiple times for each word.

Here, recent innovations in Sparsification (with SparseGPT), Runtime Compilation (with ONNX and TorchScript), Quantization (with GPTQ, QLoRA and FP8), Hardware (with Nvidia Hopper) and Frameworks (with Triton and PyTorch 2.0) serve to reduce model size and latency by more than 8x while preserving 98% of model performance on downstream tasks, as Pruning, Neural Architecture Search (NAS) and Distillation did in AI 2.0. This radically changes the economics of model serving and may be the driver for OpenAI reducing their API costs twice in one year.
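The figures above can be checked with a little arithmetic, and the core idea of quantization fits in a few lines. The sketch assumes roughly 7 billion parameters stored at 16 bits each, and uses plain round-to-nearest symmetric int8 quantization. This is a far simpler scheme than GPTQ or QLoRA, shown only to illustrate trading precision for size.

```python
def weight_bytes(num_params, bits_per_param):
    """Memory needed to hold the weights alone, in bytes."""
    return num_params * bits_per_param // 8

GIB = 1024 ** 3
params = 7_000_000_000  # assumption: "7B" taken as 7 billion parameters
fp16_gib = weight_bytes(params, 16) / GIB  # about 13 GiB
int4_gib = weight_bytes(params, 4) / GIB   # a quarter of the 16-bit size

# Cold start comparison from the text: 14 seconds versus 250 ms.
cold_start_ratio = 14.0 / 0.250

def quantize_int8(values):
    """Symmetric quantization: map floats onto integers in [-127, 127]."""
    scale = max(abs(v) for v in values) / 127
    return [round(v / scale) for v in values], scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.03, 1.0]  # toy weights (assumption)
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"fp16: {fp16_gib:.2f} GiB, int4: {int4_gib:.2f} GiB")
print(f"cold start ratio: {cold_start_ratio:.0f}x")
print(f"max round-trip error: {max_error:.6f}")
```

Each stored weight shrinks from 16 bits to 8 here, at the cost of a rounding error bounded by half the scale. Production methods pursue the same trade with more careful handling of the error.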